AI Bias Reflects and Amplifies Human Bias

As artificial intelligence applications become increasingly prevalent in fields like criminal justice, healthcare, and education, concerns are rising about unfair biases being learned and amplified by these systems. Left unaddressed, biases in AI risk enabling discrimination and lost opportunities that impact BIPOC and underrepresented groups for years to come.

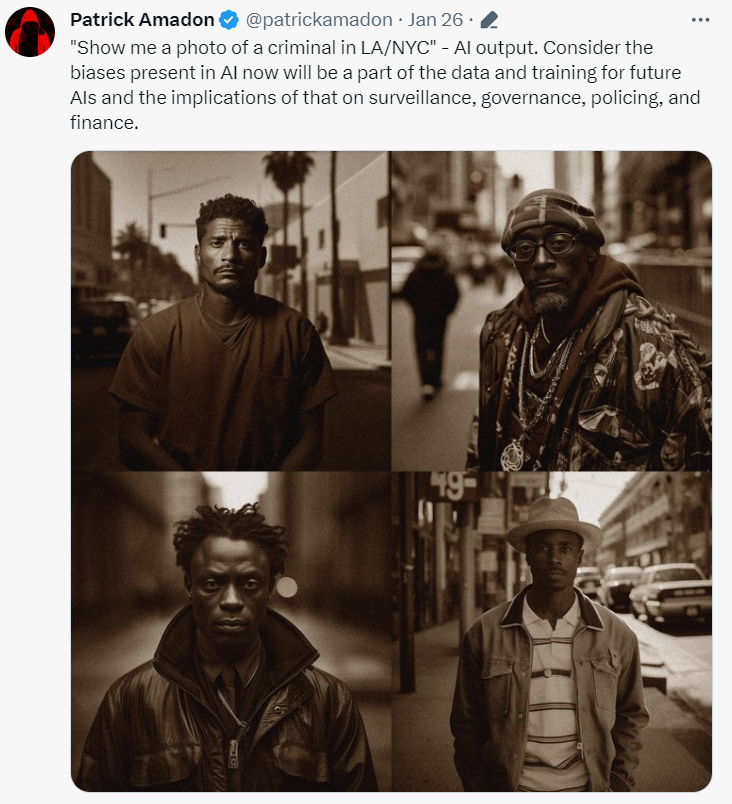

Some examples of AI-generated images from Midjourney help illustrate this worrying possibility. Artist Patrick Amadon has been raising awareness of racial and gender bias in AI on his X profile, using prompts to illustrate growing concerns.

Law enforcement has been using machine learning to profile and predict future criminals for years, according to a ProPublica article from 2016. If an AI system used for enhanced surveillance is trained on data that disproportionately associates criminal appearances with certain racial groups, it could result in those minorities being disproportionately targeted for increased surveillance and police intervention. Left uncorrected, such subtle biases could unconsciously influence policing and human decision-makers and continue to negatively shape societal attitudes over time.

“If AI thinks criminals disproportionately look like minorities, AI-enhanced surveillance is going to disproportionately target minorities. If AI thinks the babies with the most potential are white with blue eyes, those babies will get advantages. If AI thinks the most likely to be successful young adults are white and good looking, they’re going to be prioritized. We’re baking this all in now for training future AI models… we’re collectively deciding what our future looks like now.”

— Patrick Amadon, digital artist

Representational Harms

Efraim Lerner, founder of Br1ne, is an Aussie educator and rabbi who works with AI to create personalized learning experiences. Lerner noticed inherent biases and representational harms when he prompted AI image generators to “create an image of a Jewish studies class in England.”

Many of the images came back stereotypically Jewish based on the AI’s data sets. Lerner demonstrated how AI images reflect inherent biases without localized training data. He explained how general data risks promoting stereotypes over customized understanding while tailored, high-quality datasets better serve community needs. Editor’s note: Efraim clarified that “localised data alone could also create and perpetuate biases (it’s kind of a double edged sword).”

“It’s assuming all Jewish students have to be boys,” says Lerner. “There’s a lot of biases here. It’s getting the audience to think about those biases.” He highlights the potential harm of this to those who might be unfamiliar with Jewish communities.

“I chose a topic that I know quite well because I’m coming from my [Jewish] community. What I’ve grown up with. But if someone wasn’t from that community, think about how many biases they might be exposed to without themselves realizing it.”

— Efraim Lerner, Founder of Br1ne

In addition to enabling racial discrimination, biases in AI also risk perpetuating harmful gender stereotypes. A recent study in the journal Science reveals that algorithms readily adopt certain implicit biases observed in human psychology experiments. For instance, terms like “female” and “woman” tend to be closely linked with arts and humanities occupations and domestic roles, whereas “male” and “man” are more associated with math, engineering professions, and other technical fields.
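To make the idea of a word association concrete, here is a minimal sketch of how such a link can be measured: compare how close a word's vector sits to one set of attribute words versus another. The three-dimensional vectors below are hand-made, hypothetical values chosen only for illustration; they are not taken from the study or from any real model, and the function names are our own.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(word, attrs_a, attrs_b, vectors):
    """Mean similarity to attribute set A minus mean similarity to set B."""
    sim_a = sum(cosine(vectors[word], vectors[a]) for a in attrs_a) / len(attrs_a)
    sim_b = sum(cosine(vectors[word], vectors[b]) for b in attrs_b) / len(attrs_b)
    return sim_a - sim_b

# Hypothetical toy vectors, invented for this example (not real embeddings).
TOY_VECTORS = {
    "woman": [0.9, 0.1, 0.2],
    "man": [0.1, 0.9, 0.2],
    "arts": [0.8, 0.2, 0.1],
    "home": [0.7, 0.1, 0.3],
    "math": [0.2, 0.8, 0.1],
    "engineering": [0.1, 0.7, 0.3],
}

ARTS_HOME = ["arts", "home"]
MATH_ENG = ["math", "engineering"]

# Positive score: closer to arts/home. Negative score: closer to math/engineering.
woman_score = association("woman", ARTS_HOME, MATH_ENG, TOY_VECTORS)
man_score = association("man", ARTS_HOME, MATH_ENG, TOY_VECTORS)
print(f"woman: {woman_score:+.3f}")
print(f"man:   {man_score:+.3f}")
```

With these toy values, “woman” scores positive and “man” scores negative. In a real audit the vectors would come from a trained language model, and the gap would reflect patterns in the text that model learned from.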

These biases are often reflected in AI-generated images. Ask Midjourney to generate a portrait of a CEO, and it will likely return a white male in a suit.

Can we blame it on the AI?

Is the AI prejudiced? Not exactly. AI learns from historical data sets and algorithms created by humans. We decided to ask Claude AI to comment:

According to the London Interdisciplinary School, AI can exacerbate the biases present in historical datasets and amplify them through feedback loops.

Avish Parmar, AI research intern at Brookhaven National Laboratory and independent researcher at Stony Brook University, has worked on Explainable AI (XAI) and machine learning projects for nanotechnology and Natural Language Processing (NLP).

Parmar explains, “AI systems typically amplify biases learned from historical data through several avenues. First, it is possible for a bias to exist in the training data. If said historical data contains biases, such as racial or gender disparities, the model will learn and replicate these biases in its predictions. Furthermore, in models that are continuously trained on real time data such as Google Bard, it is possible for biased decisions made by humans to reinforce existing biases in the data.”
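A feedback loop of this kind can be sketched with a deliberately simplified toy simulation. The assumptions are ours, not Parmar's: two hypothetical districts have the same underlying incident rate, one starts with slightly more incidents on record, and each day a single patrol goes wherever the record is larger. Only the patrolled district can add to its record, so the system's own decisions shape the data it learns from.

```python
def simulate_patrols(recorded, true_rate, days):
    """Send one daily patrol to the district with the most recorded incidents.

    Only the patrolled district adds to its record, so earlier decisions
    determine which data gets collected next.
    """
    recorded = dict(recorded)  # copy so the caller's data is left untouched
    for _ in range(days):
        target = max(recorded, key=recorded.get)
        recorded[target] += true_rate[target]
    return recorded

# Hypothetical numbers: identical true rates, slightly uneven starting records.
initial = {"A": 11, "B": 10}
true_rate = {"A": 1, "B": 1}

after = simulate_patrols(initial, true_rate, days=100)
share_before = initial["A"] / sum(initial.values())
share_after = after["A"] / sum(after.values())
print(f"District A share of records: {share_before:.2f} -> {share_after:.2f}")
```

The winner-take-all patrol rule is an exaggeration chosen to keep the example short. Real systems are more nuanced, but the mechanism is the same: a small initial skew in the records grows even though the two districts behave identically.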

Returning to the AI-generated CEO images that predominantly feature white males: this happens because the AI draws on historical data sets. When a significant majority of the CEOs in that data are men, the AI reproduces that pattern in the images it generates.

Is debiasing an effective solution?

Researchers face challenges in developing truly debiased AI models because biases are complex. Some are explicit, as in labeled data annotations, while others are implicit, hidden in correlations within the data.

Techniques under investigation include fair representation learning, which learns invariant embeddings; preprocessing techniques such as reweighting biased samples; and algorithmic constraints that penalize harm against groups. “While these techniques have been successful in mitigating biases, they do not fully address all forms of bias as they themselves potentially introduce new bias to the data,” says Parmar.
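As an illustration of the preprocessing idea, here is a minimal sketch of one commonly used reweighting scheme: each sample gets a weight equal to the share of its group times the share of its label, divided by the share of that group-and-label combination. This is not necessarily the method Parmar has in mind, and the dataset and function names below are hypothetical, made up for the example.

```python
from collections import Counter

def reweighing_weights(samples):
    """Weight per (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    pair_counts = Counter(samples)
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }

def weighted_positive_rate(samples, weights, group):
    """Share of a group's total weight that falls on the favorable label."""
    total = sum(weights[(g, y)] for g, y in samples if g == group)
    positive = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    return positive / total

# Hypothetical (group, label) pairs; label 1 is the favorable outcome.
# Unweighted, 60% of men but only 20% of women have the favorable label.
samples = (
    [("men", 1)] * 6 + [("men", 0)] * 4 +
    [("women", 1)] * 2 + [("women", 0)] * 8
)

weights = reweighing_weights(samples)
for group in ("men", "women"):
    print(group, round(weighted_positive_rate(samples, weights, group), 3))
```

After reweighting, both groups show the same weighted rate of favorable outcomes, so a model trained with these weights no longer sees group membership as a predictor of the label. It also shows the limitation Parmar describes: the weights only correct for the group attribute they were computed on and can distort other patterns in the data.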

Fair representation learning seems to be the most promising approach for addressing representational harms, says Parmar, “as they aim to not just clean the data but also constrain the model from making harmful predictions.”

Diversity among AI researchers is also an important consideration. Parmar states, “the presence of individuals from diverse backgrounds among the AI community can bring unique perspectives and cultural insights to the development and evaluation of AI systems. This diversity can help uncover biases that may be overlooked by homogenous teams and promote inclusive solutions.”

Legislation is also an imperfect solution, as many government leaders are not equipped to understand the rapidly evolving technology. “A complementary strategy would be to develop industry standards and best practices for responsible AI development, which can help fill gaps in legislation,” says Parmar.

What can we do?

As researchers scramble to develop techniques like data debiasing, model oversight, and diversifying the AI workforce to remedy these issues, the rapid deployment of AI in high-stakes domains means biases may already be baked into systems guiding important decisions. If discriminatory biases aren’t adequately addressed, experts fear AI could amplify unfair disadvantages well into the future.

As AI becomes increasingly integrated into society, our collective responsibility to prevent these technologies from worsening inequalities grows ever more pressing. It appears that the primary issue of unconscious bias in AI stems from human biases, highlighting a need to address societal problems at their core.

Parmar added, “While companies have had hiring practices that specifically aim toward diversifying their work force there are some other measures they can take as well. Namely, they can provide diversity training and education for their employees. These training programs can cover topics such as unconscious bias, cultural competence, and inclusive communication to promote diversity in the AI workforce. Lastly, collaborating with external organizations such as ColorStack can help organizations access diverse talent pools and build partnerships to advance their diversity goals.”

No solution will be perfect, but with open challenge and multilevel efforts around data, systems design, employee training and social change, the potential impacts of AI can be guided toward empowering all groups rather than exacerbating existing inequities. Continued discussion and progress on these complex challenges will better ensure emerging technologies live up to their potential to benefit humanity.

Ultimately, it is our collective responsibility to contribute toward a more equitable world, which in turn reduces bias in training data.

Emily Carrig is Founder & Editor-in-Chief of True Stars Media. She has a dedicated curiosity for delving into concepts surrounding web3, creativity, and emerging technology.
